Use the following workflow to install CTERA Portal.

- Creating a Portal Instance.

- Configuring Network Settings.

- Optionally, configure a default gateway.

- Additional Installation Instructions for Customers Without Internet Access

- For the first server you install, follow the steps in Configuring the Primary Server.

- For any additional servers besides the primary server, install the server as described below and configure it as an additional server, as described in Installing and Configuring Additional CTERA Portal Servers.

- Make sure that you replicate the database, as described in Configuring the CTERA Portal Database for Backup.

- Back up the server as described in Backing Up the CTERA Portal Servers and Storage.

Note

You can use block-storage-level snapshots for backup, but VSAM snapshots are periodic in nature, configured to run every few hours. Therefore, using snapshots, you cannot recover the metadata to an arbitrary point in time, and you can lose a significant amount of data on failure. Also, many storage systems do not support block-level snapshots and replication, or do not do so efficiently.

Creating a Portal Instance

Contact CTERA Support, and request the latest ESXi CTERA Portal OVA file.

The following procedure uses the vSphere Client. You can also use the vSphere Host Client. When using the vSphere Host Client, because the OVA file is larger than 2GB, you must unpack the OVA file, which contains the OVF, VMDK and MF files, and then use the OVF and VMDK files to deploy the CTERA Portal.
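An OVA file is a tar archive, so the unpacking step for the vSphere Host Client can be done with a standard `tar` command. The following is a minimal sketch only: the helper name `unpack_ova`, the file name `ctera-portal.ova` and the output directory are hypothetical placeholders, not names taken from CTERA documentation.

```shell
#!/bin/sh
# Sketch: unpack an OVA (a tar archive) so that the OVF and VMDK files
# can be selected in the vSphere Host Client.
# unpack_ova is a hypothetical helper; both arguments are placeholders.
unpack_ova() {
    ova_file="$1"   # path to the OVA file received from CTERA Support
    dest_dir="$2"   # directory to extract the files into
    mkdir -p "$dest_dir" || return 1
    tar -xf "$ova_file" -C "$dest_dir" || return 1
    # List the extracted files; expect OVF, VMDK and MF entries.
    ls "$dest_dir"
}

# Example call (hypothetical file name):
# unpack_ova ctera-portal.ova ./ctera-portal-ovf
```

After extraction, select the OVF and VMDK files from the output directory when deploying.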

For the primary database server and the secondary (replication) server, the portal instance is created with a fixed-size data pool. If you require a larger data pool, which should be approximately 1% of the expected global file system size, you can extend the data pool as described in ESXi Specific Management.
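The sizing guidance can be turned into a quick calculation. The sketch below applies the 1% rule for the data pool and the archive pool guidance given later in this procedure (a minimum of 200GB, around 2% of the expected global file system size). The function names are hypothetical, sizes are in GB, and the integer arithmetic rounds down, so treat the results as rough starting points rather than exact requirements.

```shell
#!/bin/sh
# Sketch: rough pool sizes derived from the expected global file system size.
# Function names are hypothetical; shell integer arithmetic rounds down.

# Data pool: approximately 1% of the expected global file system size (GB).
data_pool_gb() {
    echo $(( $1 / 100 ))
}

# Archive pool: around 2% of the global file system size, never below 200GB.
archive_pool_gb() {
    size=$(( $1 * 2 / 100 ))
    if [ "$size" -lt 200 ]; then
        size=200
    fi
    echo "$size"
}

# Example: an expected global file system of 50TB (51200GB).
echo "data pool:    $(data_pool_gb 51200) GB"
echo "archive pool: $(archive_pool_gb 51200) GB"
```

For the 51200GB example, this gives a 512GB data pool and a 1024GB archive pool, which is also twice the size of the data pool.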

To create a portal instance:

The following procedure is based on vSphere Client 7.0.3. The order of actions might be different in different versions.

- In the vSphere Client console, click File > Deploy OVF Template.

  The Deploy OVF Template wizard is displayed.

- Browse to the CTERA Portal OVA file and choose it.

- Click NEXT.

- Continue through the wizard, specifying the following information as required for your configuration:

  - A name to identify the CTERA Portal in vCenter.

  - The location for the CTERA Portal: either a datacenter or a folder in a datacenter.

  - The compute resource to run the CTERA Portal.

- Click NEXT to review the configuration details.

  Note

  Click Ignore in the warning to be able to proceed.

- Click NEXT.

- Select the virtual disk format for the CTERA Portal software and the storage to use for this software. Refer to VMware documentation for a full explanation of the disk provisioning formats. For Select virtual disk format, select either Thick Provision Lazy Zeroed or Thick Provision Eager Zeroed, according to your preference.

  Thick Provision Lazy Zeroed – Creates a virtual disk in a default thick format. Space required for the virtual disk is allocated when the virtual disk is created. Data remaining on the physical device is not erased during creation, but is zeroed out on demand at a later time on first write from the virtual machine. Using the default flat virtual disk format does not zero out or eliminate the possibility of recovering deleted files or restoring old data that might be present on this allocated space.

  Thick Provision Eager Zeroed – Creates a virtual disk that supports clustering features such as Fault Tolerance. Space required for the virtual disk is allocated at creation time. In contrast to the flat format, the data remaining on the physical device is zeroed out when the virtual disk is created. It might take much longer to create disks in this format than to create lazy zeroed disks.

- Click NEXT.

-

Select the Destination Network that the CTERA Portal will use.

-

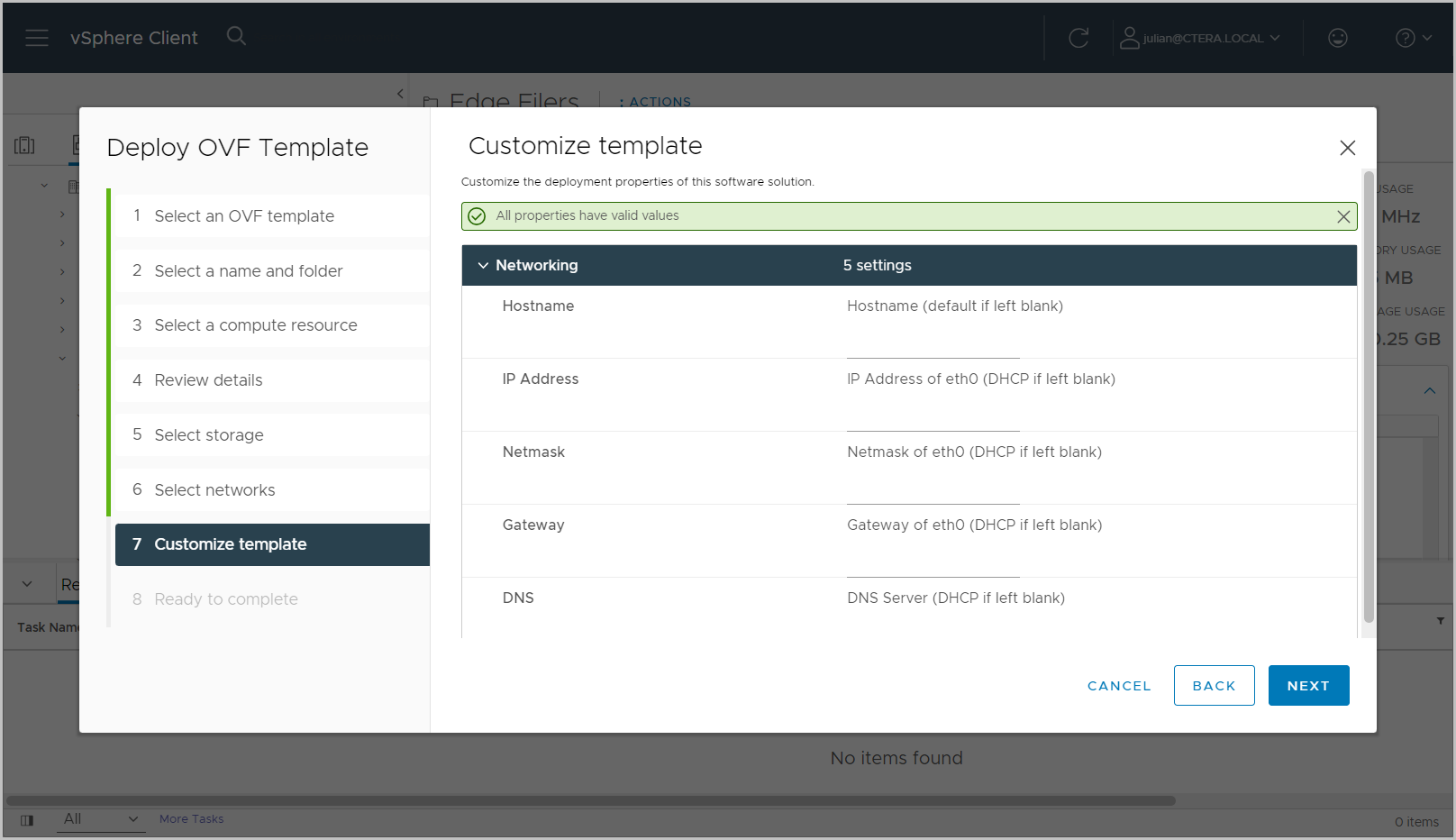

When installing a 8.1.x portal server from 8.1.1417.19, click NEXT to customize the template.

You can customize the following:- The name of the portal.

- The IP address, netmask, gateway and DNS server.

If you do not enter values, the default values for the portal are applied.

- Click NEXT to review the configuration before creating the VM and then click FINISH.

  The CTERA Portal is created and powered off.

- Power on the CTERA Portal virtual machine.

  The virtual machine starts up. On the first startup, a script runs to create a data pool from the data disk and then to load the portal dockers onto this data pool. Loading the dockers can take a few minutes.

  Note

  You need a data pool on every server. The data pool disk is created automatically when you first start the virtual machine.

  If the script to create the data pool does not run successfully, it starts again on every boot until it completes. The script has a timeout, which means that it exits if the data pool is not created within the timeout after boot time. If the data pool is not created, the dockers required by the portal are not loaded to the data pool.

  To make sure that the script completed successfully before you continue, run `docker images` to check that the docker images are available, including zookeeper, which is the last docker to load to the data pool.

  If not all of the dockers load, run the script `/usr/bin/ctera_firstboot.sh`.

  Also, refer to Troubleshooting the Installation if the script does not complete successfully.

- For the primary database server and the secondary (replication) server, right-click the portal VM in the navigation page and choose Edit Settings.

  - Click ADD NEW DEVICE > Hard Disk.

    A new hard disk is added to the list of hard disks.

  - Enter a size for the new hard disk.

    Note

    The archive pool must be at least 200GB, and should be sized at around 2% of the expected global file system size, or twice the size of the data pool.

  - Expand the New Hard disk item and, for Location, browse to the datastore you want for the archive pool.

  - Select the disk to use for the archive pool.

  - Click OK.

- Log in as root, using SSH or through the console.

  The default password is `ctera321`.

  You are prompted to change the password on your first login.

- For the primary database server and the secondary (replication) server, continue with Creating the Archive Pool.

- If an IP is not assigned, configure network settings before starting the CTERA Portal services in the next step.

- Start CTERA Portal services by running the following command:

  `portal-manage.sh start`

  Note

  Do not start the portal until both the sdconv and envoy dockers have been loaded to the data pool. You can check that these dockers have loaded in `/var/log/ctera_firstboot.log` or by running `docker images`.
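The check that the sdconv and envoy dockers are loaded before starting the portal can be scripted. The following is a minimal sketch: `images_loaded` is a hypothetical helper, and it matches each name as a substring of the `docker images` listing because the exact repository names are not given here.

```shell
#!/bin/sh
# Sketch: confirm that the named docker images appear in `docker images`
# output before starting the portal. images_loaded is a hypothetical helper.
# It reads the listing from standard input so that it can be used as:
#   docker images | images_loaded sdconv envoy
images_loaded() {
    listing=$(cat)
    for name in "$@"; do
        # grep -qF succeeds when the literal name occurs in the listing.
        if ! printf '%s\n' "$listing" | grep -qF "$name"; then
            echo "not loaded yet: $name"
            return 1
        fi
    done
    echo "all required images are loaded"
}
```

One possible use is `docker images | images_loaded sdconv envoy && portal-manage.sh start`, which starts the portal only when both names are found in the listing.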

Creating the Archive Pool

You need to create an archive pool on the primary database server and, when PostgreSQL streaming replication is required, also on the secondary (replication) server. See Using PostgreSQL Streaming Replication for details about PostgreSQL streaming replication.

To create the archive pool:

- Log in as root, using SSH or through the console.

- Run `lsblk` or `fdisk -l` to identify the disks to use for the archive pool.

- Run the following command to create the archive pool:

  `portal-storage-util.sh create_db_archive_pool Device`

  where Device is the device name of the disk to use for the archive pool.

  For example: `portal-storage-util.sh create_db_archive_pool sdd`

  This command creates both a logical volume and an LVM volume group using the specified device. Therefore, multiple devices can be specified if desired. For example: `portal-storage-util.sh create_db_archive_pool sdd sde sdf`

  Note

  When using NFS storage, before you can create the database archive pool, you have to disable the root squashing security setting for the NFS export while setting up the database replication. In the NFS implementation, use the disable root squash setting. After disabling root squash, run the following command to create the database archive pool:

  `portal-storage-util.sh create_db_archive_pool -nfs <NFS_IP>:/export/db_archive_dir`

  where NFS_IP is the IP address of the NFS mount point.

  CTERA recommends re-enabling root squash for the NFS export after the database replication is set up and verified to be working.

Troubleshooting the Installation

You can check the progress of the docker loads in one of the following ways to ensure that all the dockers are loaded. The last docker to load is zookeeper.

- In `/var/log/ctera_firstboot.log`.

- By running `docker images` to check that the docker images are available.

- By checking whether `/var/lib/ctera_firstboot_completed` is present with the date and time when the installation was performed.

If not all of the dockers load, run the script `/usr/bin/ctera_firstboot.sh`.
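The marker file check can be wrapped in a small helper. This is a sketch under stated assumptions: `firstboot_status` is a hypothetical name, and it tests only whether the marker file exists, without inspecting the date and time recorded in it.

```shell
#!/bin/sh
# Sketch: report whether the first-boot script has finished, based on the
# marker file named in this section. firstboot_status is a hypothetical
# helper; the marker path is a parameter so that the default can be overridden.
firstboot_status() {
    marker="${1:-/var/lib/ctera_firstboot_completed}"
    if [ -e "$marker" ]; then
        echo "completed"
    else
        echo "pending"
        return 1
    fi
}
```

If the helper reports `pending`, review `/var/log/ctera_firstboot.log` and, if the dockers did not all load, run `/usr/bin/ctera_firstboot.sh` as described above.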